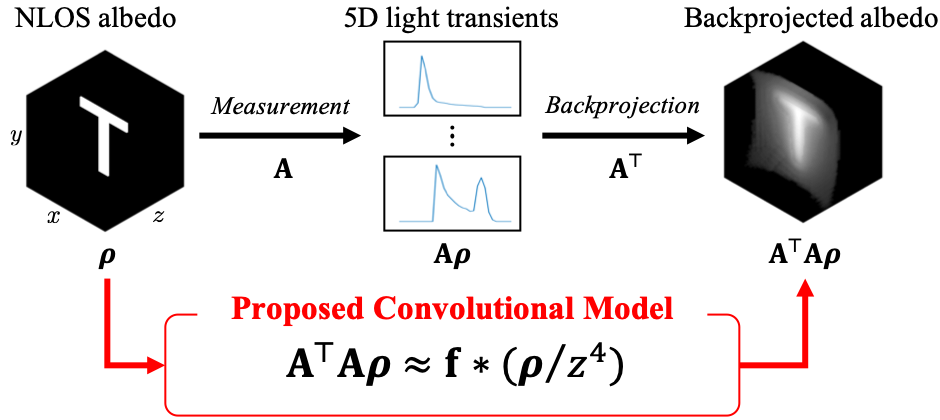

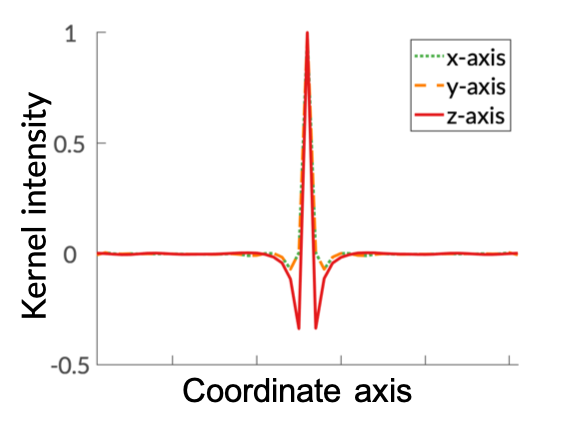

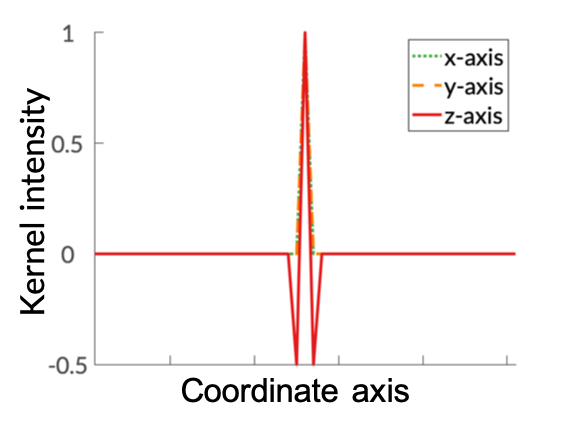

Our main technical result is to show that, under certain assumptions on the imaging geometry, the Gram operator of the NLOS measurement operator is a convolution operator. This result advances NLOS imaging in three important ways. First, it allows us to efficiently obtain the ellipsoidal tomography reconstruction by solving an equivalent linear least-squares problem involving the Gram operator: As the Gram operator is convolutional, this problem can be solved using computationally efficient deconvolution algorithms. Second, it provides a theoretical justification for the filtered backprojection algorithm: We can show that filtered backprojection corresponds to using an approximate deconvolution filter to solve the problem involving the Gram operator. Third, it facilitates the use of a wide range of priors to regularize the NLOS reconstruction problem: The convolutional property of the Gram operator implies that the corresponding regularized least-squares problems remain computationally tractable.
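To make the first point concrete, the following is a minimal sketch of how a least-squares problem with a convolutional Gram operator can be solved by deconvolution in the Fourier domain. It is an illustration under stated assumptions, not the method's actual implementation: the symbols `A` (measurement operator), `rho` (hidden-scene volume), and `b` (the backprojection `A^T y`) are introduced here for illustration, the Gram kernel is a small hypothetical toy kernel rather than one derived from a real NLOS geometry, and the convolution is assumed to be circular (periodic boundaries) so that the FFT diagonalizes it exactly. Under those assumptions, the Tikhonov-regularized normal equations `(A^T A + lam * I) rho = b` reduce to a pointwise division of spectra.

```python
import numpy as np


def solve_gram_deconvolution(backprojection, gram_kernel, lam=1e-8):
    """Solve (G + lam * I) rho = b, where G is circular convolution
    with `gram_kernel` (assumed: periodic boundaries, same grid as b)."""
    K = np.fft.fftn(gram_kernel)      # spectrum of the Gram kernel
    B = np.fft.fftn(backprojection)   # spectrum of the backprojection
    rho_hat = B / (K + lam)           # pointwise Tikhonov-regularized inverse
    return np.real(np.fft.ifftn(rho_hat))


# --- Toy setup (all quantities below are hypothetical) ---
shape = (16, 16, 16)

# Hypothetical hidden volume: a small box of unit albedo.
rho_true = np.zeros(shape)
rho_true[4:8, 5:9, 6:10] = 1.0

# Hypothetical forward kernel h: a delta plus weak coupling to the
# six nearest neighbors (indices wrap around because the model is circular).
h = np.zeros(shape)
h[0, 0, 0] = 1.0
for axis in range(3):
    for step in (1, -1):
        idx = [0, 0, 0]
        idx[axis] = step
        h[tuple(idx)] = 0.1

# The Gram kernel is the autocorrelation of h, so its spectrum is |H|^2,
# which is real and non-negative, as expected for a Gram operator.
H = np.fft.fftn(h)
gram_kernel = np.real(np.fft.ifftn(np.abs(H) ** 2))

# Simulated backprojection b = G rho_true (circular convolution via FFT).
b = np.real(np.fft.ifftn(np.fft.fftn(gram_kernel) * np.fft.fftn(rho_true)))

rho_est = solve_gram_deconvolution(b, gram_kernel)
max_error = np.max(np.abs(rho_est - rho_true))
```

Each solve costs a few FFTs over the volume instead of an iterative solve with the full measurement operator. Replacing the pointwise inverse `1 / (K + lam)` with a different, approximate filter gives the general shape of a filtered-backprojection-style reconstruction in this toy model.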

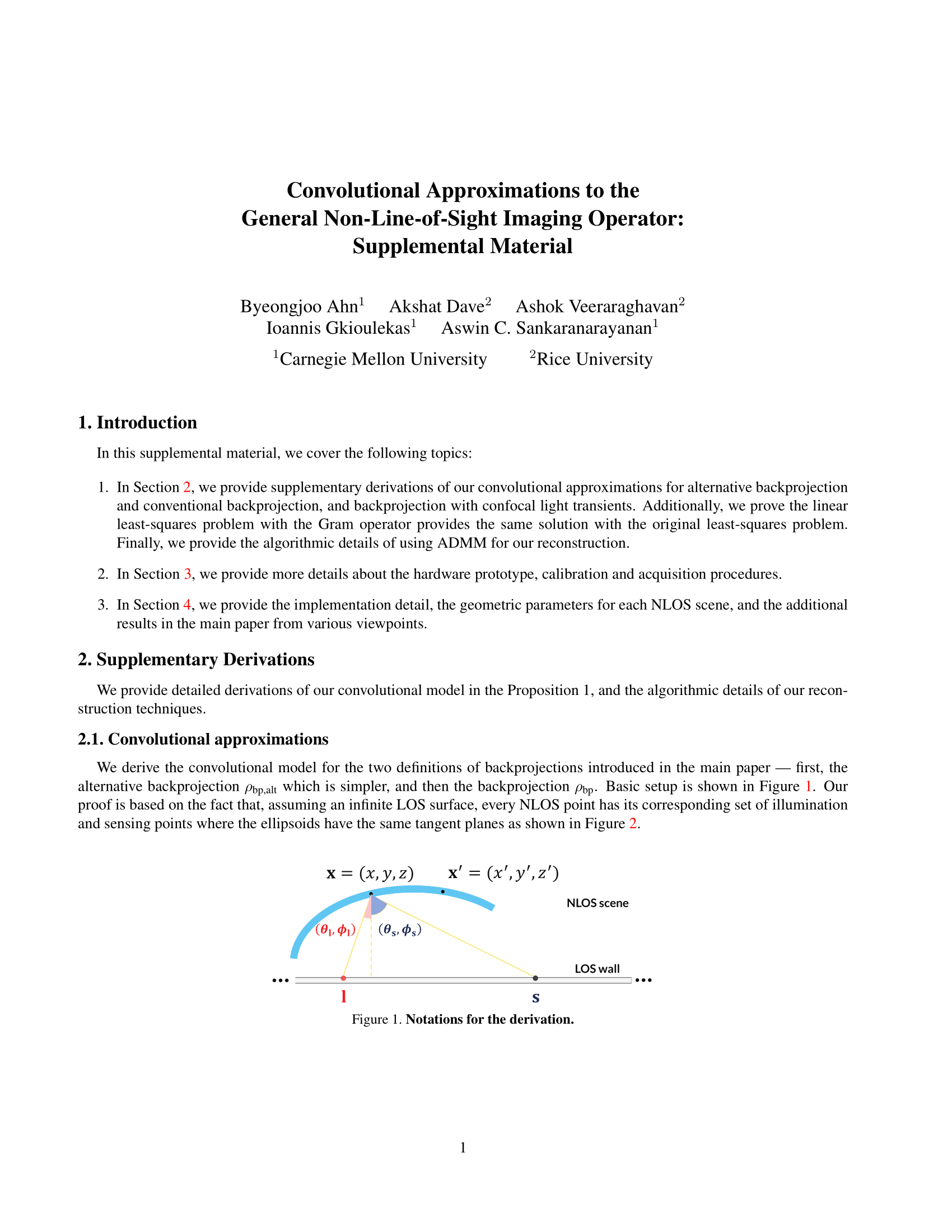

NLOS imaging setup