Episcan3D

Supreeth Achar, Matthew O'Toole, Srinivas G. Narasimhan, Kiriakos N. Kutulakos.

ACM Transactions on Graphics (SIGGRAPH) 2015.

SIGGRAPH 2015 Supplemental Video

Imaging Capabilities

The Episcan3D sensor's robustness to different conditions results from its unique imaging capabilities. The sensor can capture images in which most light from ambient sources (like the Sun) is blocked out. It can also selectively image light paths based on their type (single or multiple bounce) or on the range of depths they correspond to.

Blocking Ambient Light

The depth cameras that are available on the market today only work indoors. Outside, their active light sources are overwhelmed by sunlight. Episcan3D uses a unique imaging technique that blocks almost all ambient light, which allows its low-power light source to compete with the Sun. This opens up exciting new applications for depth cameras, such as outdoor gesture recognition and mobile robotics.

Selecting Light Paths

Episcan3D can differentiate between single-bounce light that reflects directly off surfaces and more complex multiple-bounce light paths that involve interreflection and scattering. Complex light paths confuse most conventional 3D scanners. Episcan3D is robust to these types of light paths, so it can be used to scan optically challenging objects.

Episcan3D can also selectively capture light paths based on the range of depths they correspond to. This can be used to see through smoke and fog.

Applications

Episcan3D is a low-power sensor that works in challenging conditions. It has potential applications in industrial inspection, consumer electronics, and mobile robotics.

Scanning Difficult Objects

Objects with concavities, metallic surfaces, and translucent materials are difficult to scan because of interreflections and scattering. Episcan3D can scan these types of objects accurately because it can selectively block indirect light paths.

Episcan3D could be used in 3D printing systems or in quality control applications to inspect metallic parts.

Gesture Recognition Outdoors

Contemporary gesture recognition systems such as the Microsoft Kinect, Intel RealSense, and Leap Motion work indoors, where there is little ambient light, but not outdoors in sunlight.

Episcan3D's energy-efficient, real-time depth sensing technology works both indoors and outdoors. It could power the next generation of gesture recognition systems that fit inside tablets and phones and work robustly everywhere.

Mobile Robotics

Passive stereo is commonly used for depth sensing in mobile robotics. Because passive stereo needs visual texture, it breaks down in textureless regions and in shadows, resulting in incomplete depth maps.

Episcan3D's energy-efficient active stereo provides a way to fill in the shadows and recover depth even in textureless regions.

Mobile robotics experiments and videos courtesy of Joe Bartels.

Seeing through Smoke

Imaging and depth estimation in the presence of fog, smoke, or turbid water are challenging because light scatters volumetrically through the medium. Episcan3D can block scattered light and detect the ballistic photons that make it through the medium without scattering. This allows for imaging and depth estimation underwater and through smoke.

How it Works

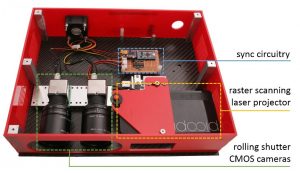

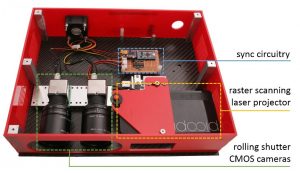

Our Episcan3D prototype is built from readily available, off-the-shelf components.

- a 30 lumen raster scanning projector

- a pair of rolling shutter CMOS cameras

- a microcontroller for synchronization

All the parts used in our prototype could be replaced with smaller equivalents. Small CMOS rolling shutter cameras are ubiquitous and the optical engine of a raster scanning projector occupies only a few cubic centimeters. A product based on our prototype's working principle could fit into a laptop, tablet or cellphone.

The Working Principle

The Episcan3D sensor's raster scanning projector illuminates the scene one scanline at a time. The cameras and projector are aligned so that, by epipolar geometry, one projector scanline corresponds to a single row of pixels in each camera. The rolling shutters on the cameras are synchronized so that the exposed row moves in lockstep with the active projector scanline.
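As a rough illustration of this lockstep exposure, the Python sketch below models a frame as a sequence of projector scanlines and compares a camera that exposes only the matching row with one that leaves every row exposed for the whole frame. It is a toy model under stated assumptions, not the prototype's implementation: the function `capture` and the inputs `direct` and `indirect` are hypothetical names, and the light values are made up for the example.

```python
def capture(num_rows, direct, indirect, synchronized):
    """Toy model of one frame (all names and values are illustrative assumptions).

    direct[r]      : light from projector scanline r that reaches camera row r
                     (the single-bounce path, which stays on the matching row)
    indirect[r][c] : light from projector scanline r that reaches camera row c
                     after multiple bounces (it can land on any row)
    synchronized   : True  -> only camera row r is exposed while scanline r is lit
                     False -> every camera row is exposed during every scanline
    """
    image = [0.0] * num_rows
    for r in range(num_rows):            # projector draws scanline r
        for c in range(num_rows):        # camera row c
            if synchronized and c != r:
                continue                 # row c is not exposed right now
            if c == r:
                image[c] += direct[r]    # direct light lands on the matching row
            image[c] += indirect[r][c]   # indirect light lands wherever it lands
    return image


# Made-up scene: 4 rows, unit direct light, 0.25 indirect light between all rows.
rows = 4
direct = [1.0] * rows
indirect = [[0.25] * rows for _ in range(rows)]

print(capture(rows, direct, indirect, synchronized=True))   # [1.25, 1.25, 1.25, 1.25]
print(capture(rows, direct, indirect, synchronized=False))  # [2.0, 2.0, 2.0, 2.0]
```

In this made-up scene the synchronized camera keeps all of the direct light while the indirect contribution per row drops from 1.0 to 0.25, because indirect light that arrives on a row other than the exposed one is never recorded.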

The projector takes only 20 microseconds to draw a scanline, so the exposure for each camera row is also very short. This short exposure integrates very little ambient light while still collecting all the light from the projector. Only light paths that satisfy the epipolar constraint between the projector and camera reach the camera sensor, which blocks almost all multipath light.
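To give a feel for the numbers, the short calculation below compares the ambient light integrated during a 20 microsecond row exposure with the ambient light a row would integrate if it stayed exposed for a full frame. The 1/60 second comparison exposure is an assumption chosen for illustration, not a figure from the prototype, and `ambient_fraction` is a hypothetical helper. The calculation also assumes constant ambient illumination, so collected ambient light is proportional to exposure time.

```python
def ambient_fraction(row_exposure_s, full_exposure_s):
    """Fraction of ambient light kept when the exposure shrinks from
    full_exposure_s to row_exposure_s, assuming constant ambient light."""
    return row_exposure_s / full_exposure_s


ROW_EXPOSURE_S = 20e-6             # scanline time stated in the text
ASSUMED_FULL_EXPOSURE_S = 1 / 60   # assumption: a full-frame exposure at 60 Hz

fraction = ambient_fraction(ROW_EXPOSURE_S, ASSUMED_FULL_EXPOSURE_S)
reduction = 1 / fraction

print(f"ambient light kept: {fraction:.2%}")     # about 0.12%
print(f"reduction factor:   {reduction:.0f}x")   # about 833x
```

Under these assumptions each row collects roughly 0.12% of the ambient light it would see over a full frame, while the projector light for that row arrives entirely within the short exposure window.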

More Details

For an in-depth description of the technology behind the Episcan3D sensor, please refer to our paper and the accompanying video.

M. O'Toole, S. Achar, S.G. Narasimhan, K.N. Kutulakos. "Homogeneous Codes for Energy-Efficient Illumination and Imaging", ACM SIGGRAPH 2015

About

The Episcan3D sensor is the result of a collaborative effort between researchers at the University of Toronto and Carnegie Mellon University.

Awards

The Episcan3D sensor has been demonstrated at computer vision and graphics conferences and has won awards for best demo.

"Energy-Efficient Structured Light Imaging"

S. Achar, M. O'Toole, K.N. Kutulakos and S.G. Narasimhan

- Best Demo Award at ICCP 2015

- A9 Best Demo Award at CVPR 2015

Sponsors

O’Toole and Kutulakos gratefully acknowledge the support of the QEII-GSST program, and the Natural Sciences and Engineering Research Council of Canada under the RGPIN, RTI, and SGP programs. Achar and Narasimhan were supported in part by US NSF Grants 1317749 and 0964562, and by the US Army Research Laboratory (ARL) Collaborative Technology Alliance Program, Cooperative Agreement W911NF-10-2-0016.