Abstract

Optics designers use simulation tools to help design lenses for a wide range of applications. Commercial tools rely on finite differencing and sampling methods to perform gradient-based optimization of lens design objectives. Recently, differentiable rendering techniques have made it possible to compute the gradients of these objectives more efficiently. However, these techniques cannot optimize for light throughput, which is an important metric in many applications.

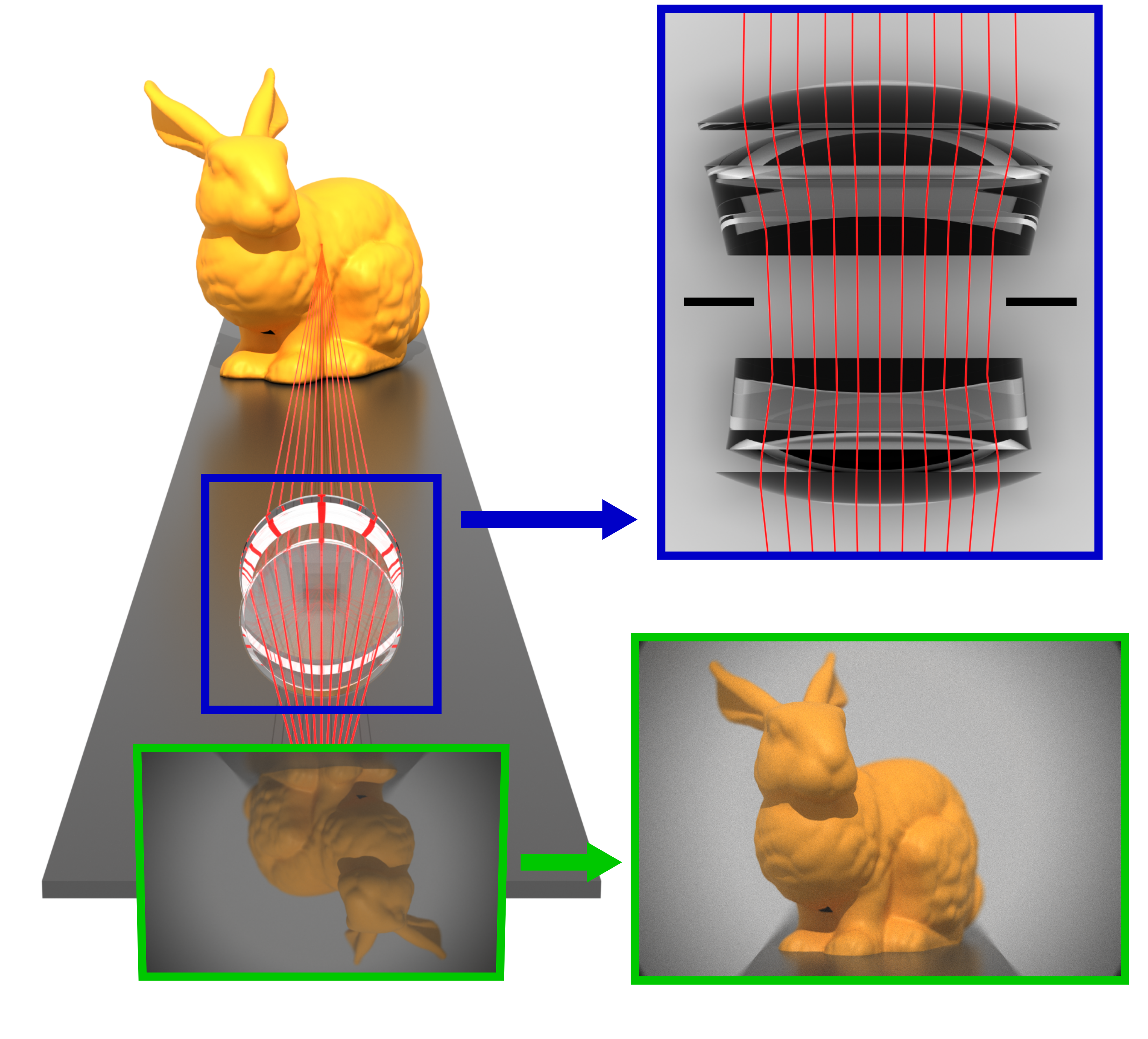

We develop a method for calculating the gradients of optical systems with respect to both focus and light throughput. We formulate lens performance as an integral loss over a dynamic domain, which allows us to use differentiable rendering techniques to calculate the required gradients. We also develop a ray tracer specifically designed for refractive lenses and derive formulas for gradients that optimize for focus and light throughput simultaneously. Explicitly optimizing for light throughput produces lenses that outperform traditionally optimized lenses, which tend to prioritize focus alone. To evaluate our lens designs, we simulate several applications in which our lenses: (1) improve imaging performance in low-light environments, (2) reduce motion blur for high-speed photography, and (3) minimize vignetting for large-format sensors.
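To illustrate why a dynamic integration domain matters, here is a minimal one-dimensional sketch. It is not the paper's formulation: the aperture half-width `aperture_half_width(theta)` and the per-ray error `ray_error(x, theta)` are hypothetical functions invented for this example. By the Leibniz integral rule, the derivative of an integral whose limits depend on the parameter splits into an interior term and a boundary term. Dropping the boundary term ignores how the aperture itself changes with the lens parameter.

```python
"""1D toy: derivative of an integral whose domain depends on the parameter."""

# Hypothetical model: the aperture opens linearly with the design parameter.
D_APERTURE_DTHETA = 0.5


def aperture_half_width(theta):
    return 1.0 + D_APERTURE_DTHETA * theta


def ray_error(x, theta):
    # Hypothetical per-ray focus error: grows with ray height x and with theta.
    return (theta * x) ** 2


def d_ray_error_dtheta(x, theta):
    # Partial derivative of ray_error with respect to theta at fixed x.
    return 2.0 * theta * x * x


def midpoint_integral(f, lo, hi, n=2000):
    # Simple midpoint-rule quadrature on [lo, hi].
    h = (hi - lo) / n
    return h * sum(f(lo + (i + 0.5) * h) for i in range(n))


def loss(theta):
    # Integral of the per-ray error over the parameter-dependent aperture.
    a = aperture_half_width(theta)
    return midpoint_integral(lambda x: ray_error(x, theta), -a, a)


def interior_term(theta):
    # Differentiates the integrand only; treats the aperture as fixed.
    a = aperture_half_width(theta)
    return midpoint_integral(lambda x: d_ray_error_dtheta(x, theta), -a, a)


def boundary_term(theta):
    # Contribution of the two moving end points -a(theta) and +a(theta).
    a = aperture_half_width(theta)
    return (ray_error(a, theta) + ray_error(-a, theta)) * D_APERTURE_DTHETA


def finite_difference(theta, h=1e-5):
    # Central finite difference of the full loss, used as a reference.
    return (loss(theta + h) - loss(theta - h)) / (2.0 * h)


if __name__ == "__main__":
    theta = 0.8
    print("interior only      :", interior_term(theta))
    print("interior + boundary:", interior_term(theta) + boundary_term(theta))
    print("finite difference  :", finite_difference(theta))
```

In this toy, only the sum of both terms agrees with the finite-difference reference. The interior term alone misses the part of the gradient caused by the aperture changing size.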

Aperture-aware gradients

Throughput-sharpness trade-off

Low-light scenes

In low-light scenarios, optimizing a lens for greater sharpness may not yield better results in the presence of noise. Alternatively, optimizing for throughput can capture more light from the scene at the cost of some sharpness.
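The noise side of this trade-off can be sketched with a standard sensor noise model. This is an assumption for illustration, not the paper's simulation: the signal is a mean photon count, shot noise has variance equal to that count, and read noise adds a fixed variance. The photon counts and read-noise level below are hypothetical.

```python
import math


def snr(photons, read_noise=5.0):
    """Signal-to-noise ratio under an assumed shot-noise plus read-noise model.

    photons    : mean number of photons collected per pixel (hypothetical)
    read_noise : standard deviation of sensor read noise, in electrons
    """
    return photons / math.sqrt(photons + read_noise ** 2)


def snr_gain(photons, throughput_factor, read_noise=5.0):
    # How much the SNR improves if the lens passes throughput_factor times more light.
    return snr(photons * throughput_factor, read_noise) / snr(photons, read_noise)


if __name__ == "__main__":
    # Compare a dim scene with a bright one for a lens with 4x the throughput.
    for photons in (10, 10_000):
        print(photons, "photons -> SNR gain", snr_gain(photons, 4.0))
```

Under this model, quadrupling throughput roughly doubles the SNR in bright scenes, where shot noise dominates. In dim scenes, where read noise dominates, the gain is larger. This sketch does not model the loss of sharpness that comes with higher throughput.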

Resources

Paper: Our paper is available on the ACM Digital Library and locally.

Poster: Our poster is available here.

Presentation: Our presentation slides are available here.

Code: Our code is available on GitHub.

Citation

@inproceedings{Teh2024ApertureAware,
  author = {Teh, Arjun and Gkioulekas, Ioannis and O'Toole, Matthew},
  title = {Aperture-Aware Lens Design},
  year = {2024},
  isbn = {9798400705250},
  publisher = {Association for Computing Machinery},
  address = {New York, NY, USA},
  url = {https://doi.org/10.1145/3641519.3657398},
  doi = {10.1145/3641519.3657398},
  booktitle = {ACM SIGGRAPH 2024 Conference Papers},
  articleno = {117},
  numpages = {10},
  series = {SIGGRAPH '24}
}

Acknowledgments

We thank Yexin Hu for providing code for importing Zemax files to Blender, and Maysam Chamanzar for facilitating access to a Zemax license. This work was supported by the National Science Foundation under awards 1730147, 1900849, and 2238485, a Sloan Research Fellowship, and a gift from Google Research.