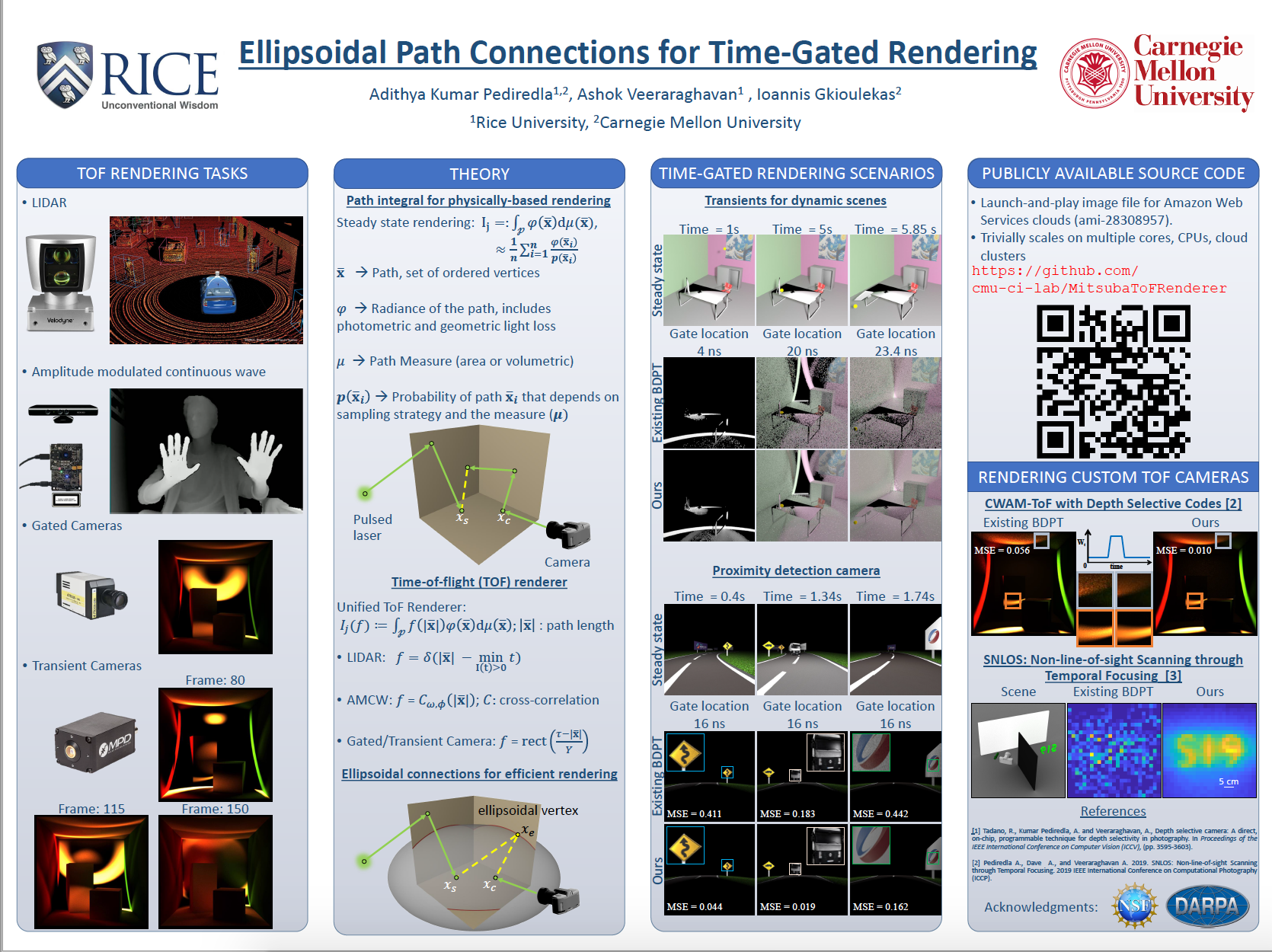

Key idea

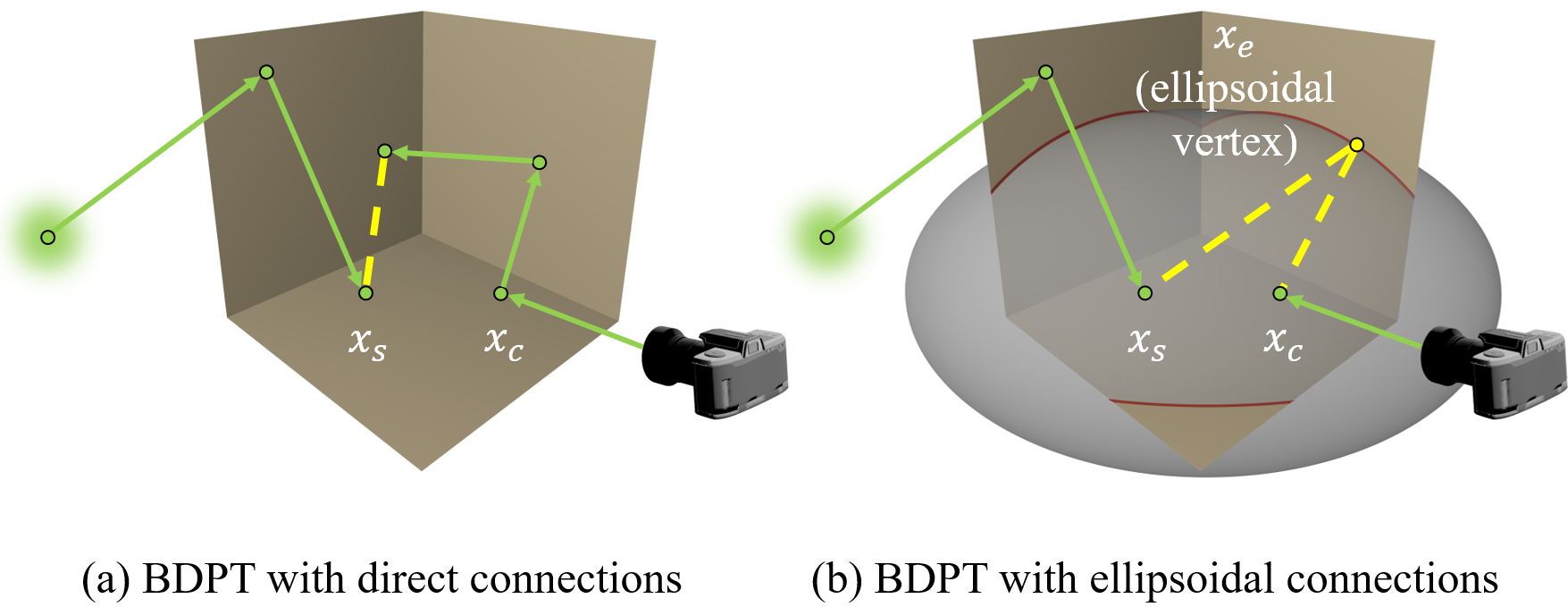

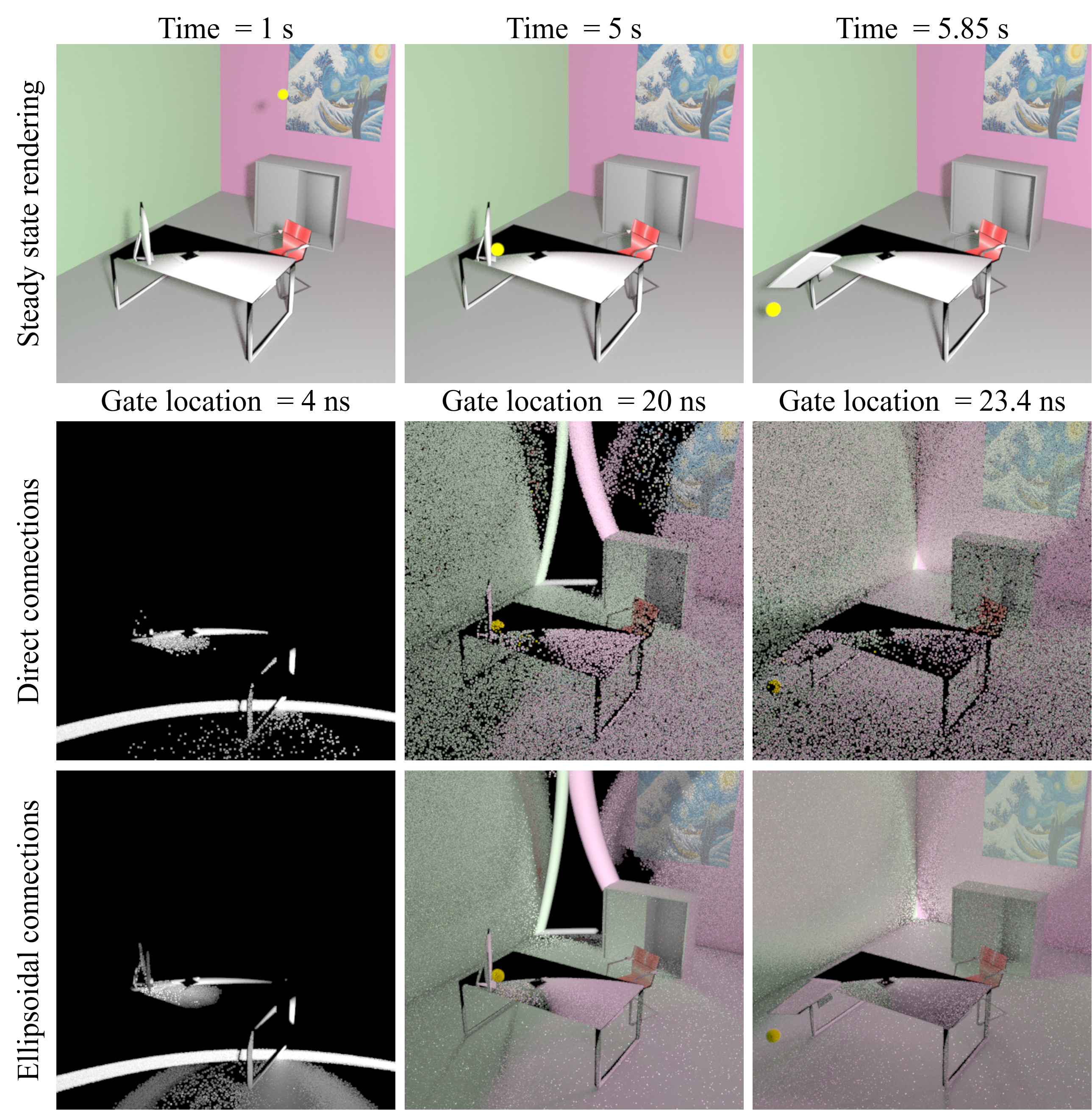

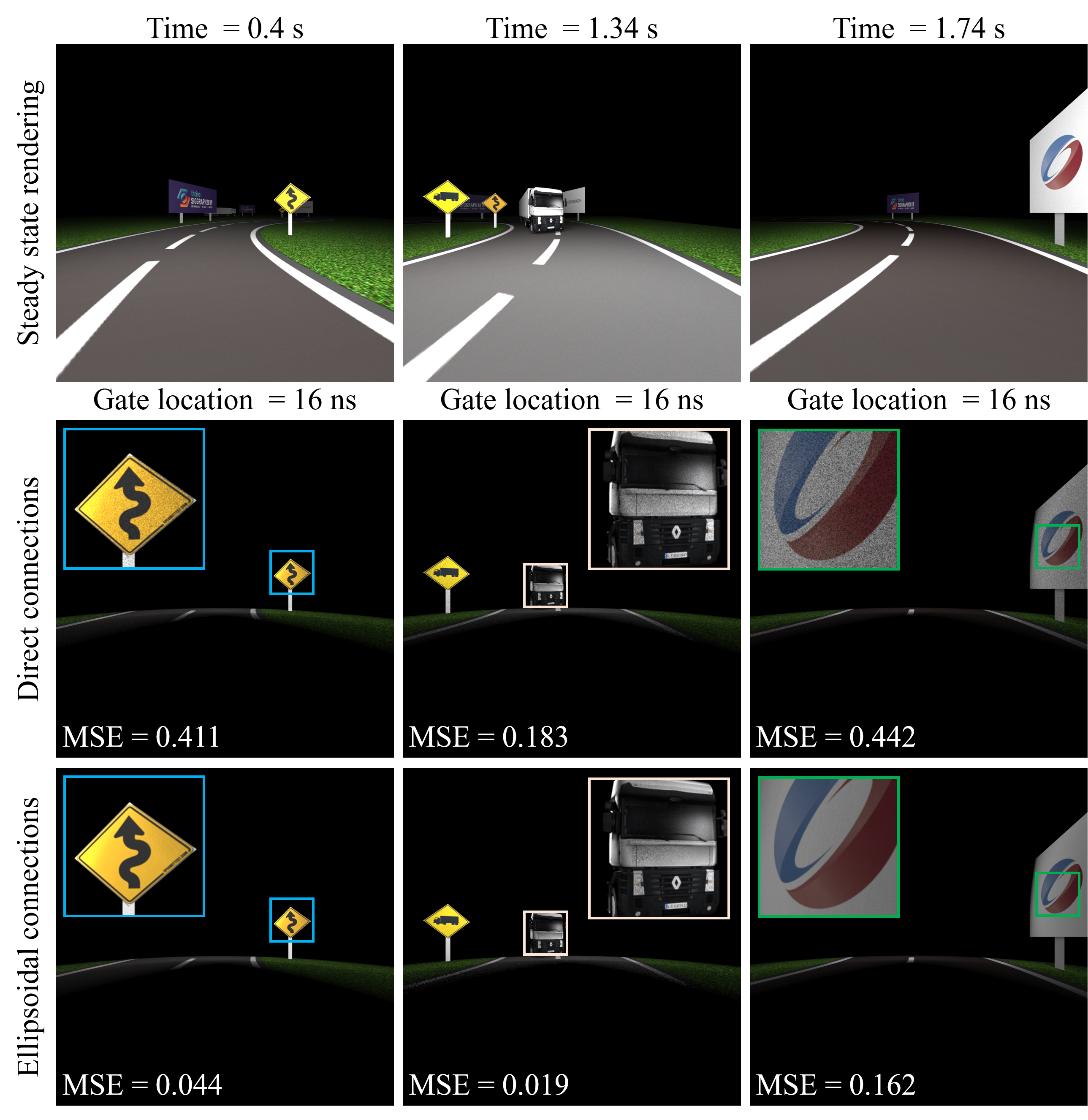

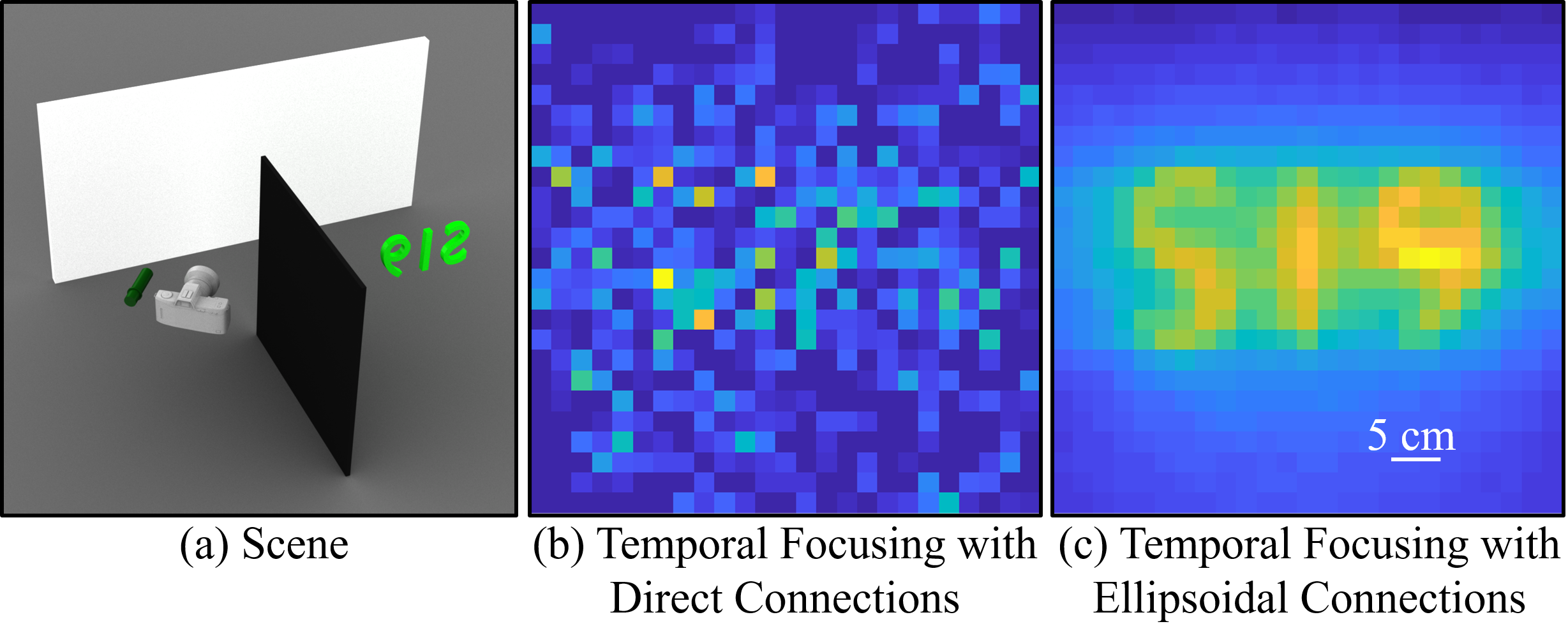

Given sampled source and camera subpaths, standard BDPT forms complete paths by directly connecting every vertex in one subpath to every vertex in the other. Unfortunately, baseline BDPT becomes very inefficient for time-gated rendering tasks.
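One way to see where the inefficiency comes from: a direct connection leaves no freedom in the total pathlength, so whether a path lands inside a narrow pathlength gate is a matter of chance. The sketch below enumerates the direct connections and reports the fraction that fall inside a gate. It is only an illustration under assumptions not stated in the text: vertices are plain 3D tuples, pathlength is geometric length, the gate is a boxcar `[tau_min, tau_max]`, and the function names are hypothetical.

```python
import math

def prefix_lengths(subpath):
    """Accumulated geometric length from the first vertex to each vertex."""
    lengths = [0.0]
    for a, b in zip(subpath, subpath[1:]):
        lengths.append(lengths[-1] + math.dist(a, b))
    return lengths

def direct_connection_lengths(source_subpath, camera_subpath):
    """Total pathlength of every direct source-vertex to camera-vertex connection.

    Both subpaths are assumed to be non-empty lists of 3D points.
    """
    src_len = prefix_lengths(source_subpath)
    cam_len = prefix_lengths(camera_subpath)
    return [
        src_len[i] + math.dist(xs, xc) + cam_len[j]
        for i, xs in enumerate(source_subpath)
        for j, xc in enumerate(camera_subpath)
    ]

def fraction_in_gate(totals, tau_min, tau_max):
    """Fraction of pathlengths inside the (assumed boxcar) gate [tau_min, tau_max]."""
    hits = sum(1 for t in totals if tau_min <= t <= tau_max)
    return hits / len(totals)
```

For example, with `source_subpath = [(0, 0, 0), (4, 0, 0)]` and `camera_subpath = [(0, 3, 0), (0, 3, 4)]`, two of the four direct connections have pathlength 9, so a gate around 9 keeps half of them; narrowing or shifting the gate quickly drives that fraction to zero.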

We introduce a path sampling algorithm that mitigates the inefficiency of baseline BDPT for time-gated rendering tasks. We first select a pathlength τ by either importance sampling or stratified sampling of the narrow pathlength importance function. We then form complete paths by connecting every pair of vertices in the source and camera subpaths through an additional vertex, rather than directly as in standard BDPT. The new vertex is selected so that the total pathlength equals τ.
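The two steps can be sketched as follows. Geometrically, the points v with |x_s − v| + |v − x_c| equal to a fixed remaining length form an ellipsoid with foci at the two subpath vertices, so once a direction from x_s is chosen the distance to the new vertex has a closed form. This is a minimal free-space sketch, not the full algorithm: it assumes pathlength is geometric length, a boxcar importance function on `[tau_min, tau_max]`, and a caller-supplied direction, and it does not intersect the ellipsoid with scene geometry. All function names are hypothetical.

```python
import math
import random

def stratified_pathlengths(tau_min, tau_max, n, rng=random):
    """One jittered pathlength per equal-width stratum of [tau_min, tau_max]."""
    width = (tau_max - tau_min) / n
    return [tau_min + (k + rng.random()) * width for k in range(n)]

def connection_vertex(xs, xc, remaining, direction):
    """Point v on the ray from xs along `direction` (assumed nonzero) such that
    |xs - v| + |v - xc| == remaining.

    Returns None when `remaining` does not exceed |xs - xc|, in which case
    no non-degenerate connecting vertex exists.
    """
    d = [c - s for s, c in zip(xs, xc)]          # vector from xs to xc
    dist2 = sum(x * x for x in d)
    if remaining <= 0.0 or remaining * remaining <= dist2:
        return None
    norm = math.sqrt(sum(w * w for w in direction))
    w = [x / norm for x in direction]            # unit direction
    wd = sum(a * b for a, b in zip(w, d))
    # Solve t + |t*w - d| = remaining for the ray distance t.
    t = (remaining * remaining - dist2) / (2.0 * (remaining - wd))
    return tuple(s + t * wi for s, wi in zip(xs, w))

def remaining_length(tau, source_prefix_length, camera_prefix_length):
    """Pathlength budget left for the two connection segments."""
    return tau - source_prefix_length - camera_prefix_length
```

As a quick check, `connection_vertex((0, 0, 0), (2, 0, 0), 4.0, (0, 1, 0))` gives the point `(0.0, 1.5, 0.0)`: the two connection segments have lengths 1.5 and 2.5, which sum to the requested 4. In a scene with surfaces, the new vertex would additionally have to lie on geometry, which this sketch leaves out.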