See our DIY guide for a tutorial on how to build your own system!

Abstract

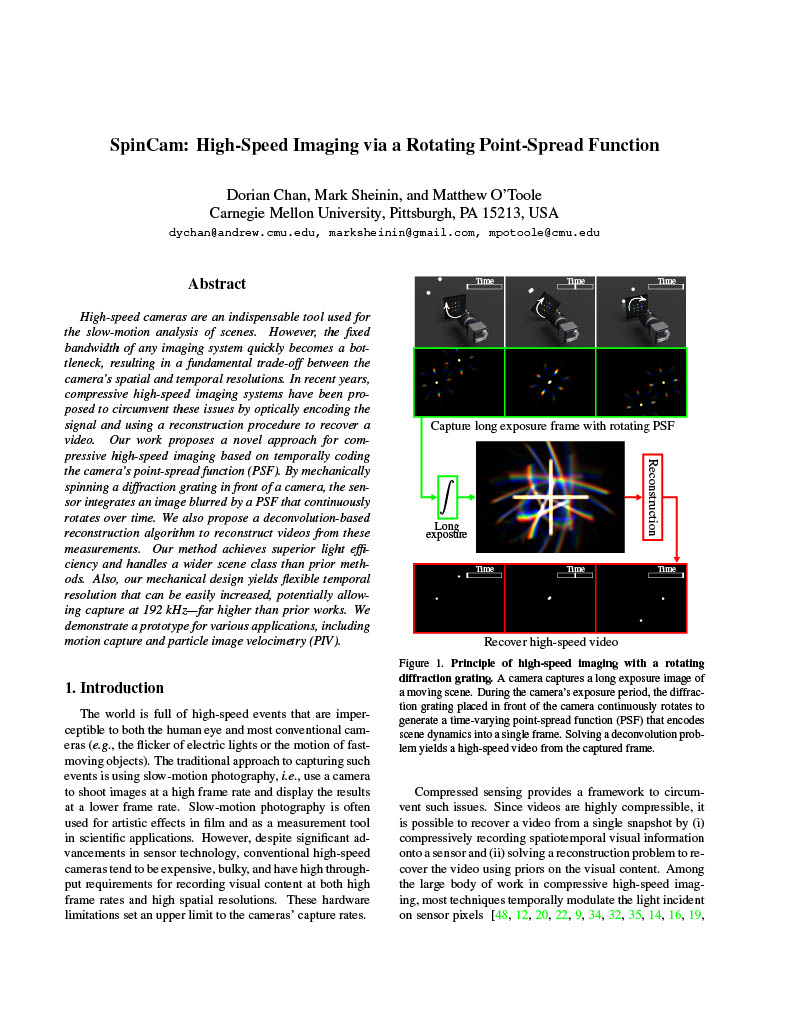

High-speed cameras are an indispensable tool for the slow-motion analysis of scenes. However, the fixed bandwidth of any imaging system quickly becomes a bottleneck, resulting in a fundamental trade-off between the camera's spatial and temporal resolutions. In recent years, compressive high-speed imaging systems have been proposed to circumvent this trade-off by optically compressing the signal and using a reconstruction procedure to recover a video. In our work, we propose a novel approach for compressive high-speed imaging based on temporally coding the camera's point-spread function (PSF). By mechanically spinning a diffraction grating in front of a camera, the sensor integrates an image blurred by a PSF that continuously rotates over time. We also propose a deconvolution-based algorithm to reconstruct videos from these measurements. Our method achieves superior light efficiency and handles a wider class of scenes compared to prior methods. In addition, our mechanical design yields a flexible temporal resolution that can be easily increased, potentially allowing capture at 192 kHz, far higher than prior work. We demonstrate a prototype on various applications including motion capture and particle image velocimetry (PIV).

Overview

High-speed phenomena are ubiquitous in our everyday lives. From the fluttering wings of a hummingbird to a flying ball, our world is full of fast events that are invisible to the naked eye and conventional cameras. Capturing such fast events requires high-speed cameras, but conventional devices are expensive, bulky, and require high throughput.

To get around these issues, recent work has proposed leveraging compressed sensing: it may be possible to recover a high-speed video from a single snapshot by optically coding the spatio-temporal content and then solving a reconstruction problem that uses priors to recover the original video. Current approaches code this content either by temporally modulating the sensitivity of sensor pixels, or by coding the scene into a lower-dimensional signal that can be captured more quickly than with a traditional camera.

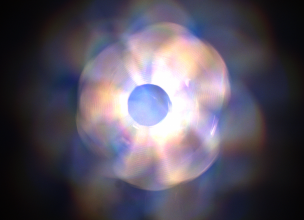

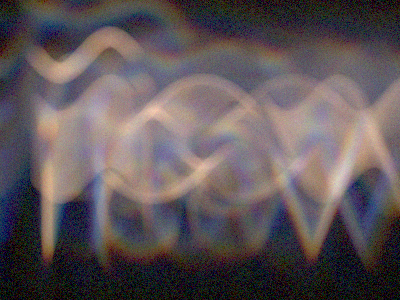

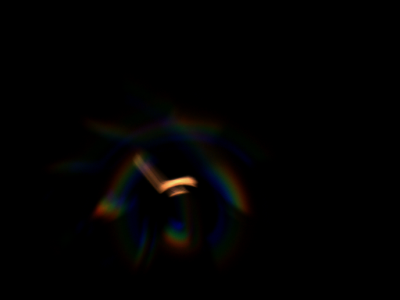

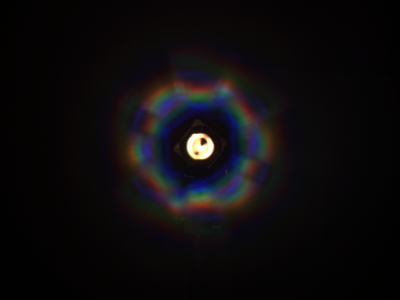

In our work, we propose an alternative technique based on temporally modulating the point-spread function (PSF) of the camera instead of the pixels directly. The signal at each instant of time leaves a slightly different response on the sensor, so by examining which responses are present in the captured image, a high-speed video can be reconstructed. We focus on the simple case of rotating the PSF over the camera exposure, which can be implemented mechanically at low cost and is capable of providing modulation at very fast rates. Like other approaches that use a broad PSF, our system focuses on recovering sparse scenes at high resolution. The accompanying figures illustrate the process.
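The image formation described above can be sketched as a sum, over time, of each video frame convolved with a rotated copy of the PSF. The snippet below is only an illustrative simulation under stated assumptions, not our calibrated model or reconstruction code: the function names, the five-spot PSF shape, the `spacing` parameter, and the use of circular (FFT-based) convolution are all hypothetical choices made for brevity.

```python
import numpy as np

def grating_psf(size, angle_rad, orders=(-2, -1, 0, 1, 2), spacing=6.0):
    """Hypothetical PSF: a row of equally bright diffraction spots lying on a
    line through the image center, oriented at `angle_rad`."""
    psf = np.zeros((size, size))
    center = size // 2
    for m in orders:
        row = int(round(center + m * spacing * np.sin(angle_rad)))
        col = int(round(center + m * spacing * np.cos(angle_rad)))
        psf[row, col] += 1.0
    return psf / psf.sum()  # normalize so the PSF conserves total light

def simulate_snapshot(video, angles):
    """Integrate one exposure: sum over frames of (frame * rotated PSF).
    Convolution is circular (FFT-based), an assumption made for simplicity."""
    n_frames, size, _ = video.shape
    snapshot = np.zeros((size, size))
    for frame, angle in zip(video, angles):
        # Move the PSF center to index (0, 0) so the convolution does not shift the image.
        psf = np.fft.ifftshift(grating_psf(size, angle))
        blurred = np.fft.ifft2(np.fft.fft2(frame) * np.fft.fft2(psf))
        snapshot += np.real(blurred)
    return snapshot

if __name__ == "__main__":
    # A sparse toy scene: one bright point that moves between frames.
    n_frames, size = 8, 64
    video = np.zeros((n_frames, size, size))
    for t in range(n_frames):
        video[t, 32, 20 + 3 * t] = 1.0
    # The assumed PSF is symmetric under a 180-degree turn, so half a rotation
    # already gives every frame a distinct orientation.
    angles = np.linspace(0.0, np.pi, n_frames, endpoint=False)
    snapshot = simulate_snapshot(video, angles)
    print(snapshot.shape, snapshot.sum())
```

In this toy model, each point in the snapshot is surrounded by a line of spots whose orientation reveals the time at which the point was present, which is the cue a reconstruction procedure would exploit.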

Scene sparsity

One advantage of temporally modulating the PSF is that it can handle a wider range of sparse scenes than previous approaches for recovering sparse scenes. In particular, it is better able to reconstruct scenes that are temporally sparse but spatially dense. This is because our method uses the entire sensor for the signal at each time instant, while previous approaches use only a subset, so our approach captures more information.

Light efficiency

Using a rotating PSF is also much more light-efficient and robust to noise than other approaches for sparse scenes. While other approaches capture only a subset of the available light, our system captures all of it because the entire sensor is always exposed. In addition, because the signal from different times is mixed together on the sensor, the effect of read noise is minimized. This makes our approach effective in low-light scenarios.
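To give a rough sense of why collecting more light helps, the snippet below compares the per-pixel signal-to-noise ratio (SNR) of a system that passes all incoming light with one that passes only a fraction. It is a back-of-the-envelope sketch assuming a standard shot-noise plus read-noise model; the function name, the 50% throughput, and the photon and noise values are hypothetical and are not measurements from our prototype.

```python
import math

def snapshot_snr(signal_photons, throughput, read_noise_std):
    """Per-pixel SNR assuming Poisson shot noise plus Gaussian read noise.

    signal_photons : photons that would arrive with no light loss
    throughput     : fraction of light that reaches the sensor (0 to 1]
    read_noise_std : read noise standard deviation, in electrons
    """
    collected = throughput * signal_photons
    # Shot-noise variance equals the collected signal; read-noise variance adds to it.
    return collected / math.sqrt(collected + read_noise_std ** 2)

if __name__ == "__main__":
    photons, read_noise = 100.0, 5.0  # hypothetical low-light values
    full = snapshot_snr(photons, throughput=1.0, read_noise_std=read_noise)
    half = snapshot_snr(photons, throughput=0.5, read_noise_std=read_noise)
    print(f"full throughput SNR: {full:.2f}, half throughput SNR: {half:.2f}")
```

Under this model the gap between the two systems widens as the scene gets darker, because read noise stays fixed while the collected signal shrinks.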

Real results

With our prototype hardware setup, we captured a number of real scenes at a final video rate of 6583.10 FPS. Our setup is suitable for a wide range of high-speed scenes.

A striped ball bouncing off a board. Notice the change in the ball's rotation.

A toy bullet bounces off a horizontal board.

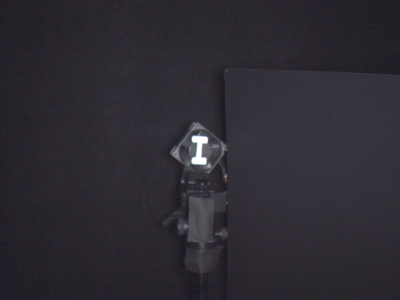

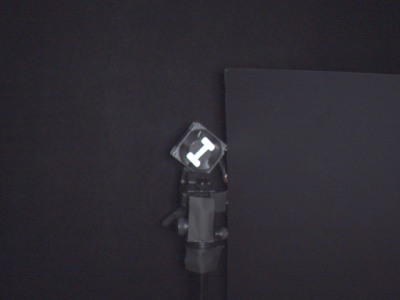

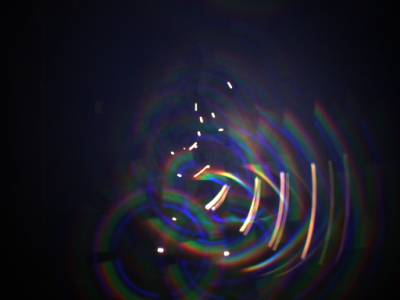

An "I" is spun roughly 120 degrees over the camera exposure.

A person swings an oversized rubber band against the ground.

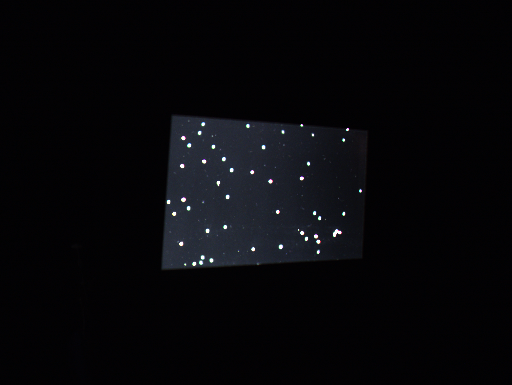

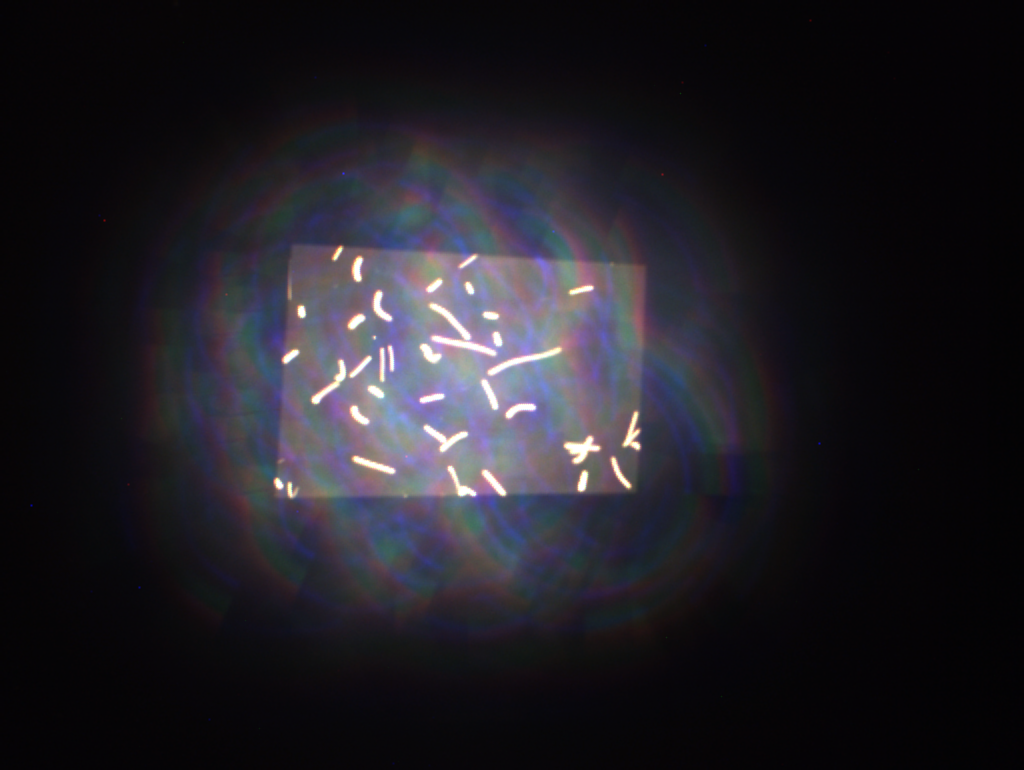

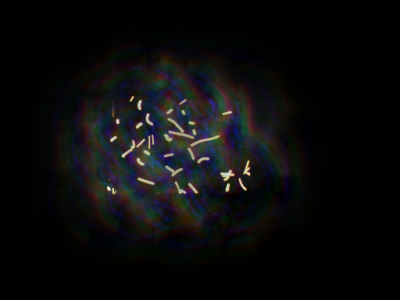

Particle image velocimetry (PIV): tracking the movement of sparse points to measure fluid flow.

All results (with parameters)

Acknowledgements

We thank Amitabh Vyas and Ioannis Gkioulekas for fruitful discussions, and Justin Macey for lending us the motion capture equipment. Matthew O'Toole was supported by a National Science Foundation CAREER award (IIS 2238485).