Presenters

Dartmouth College

Carnegie Mellon University

Description

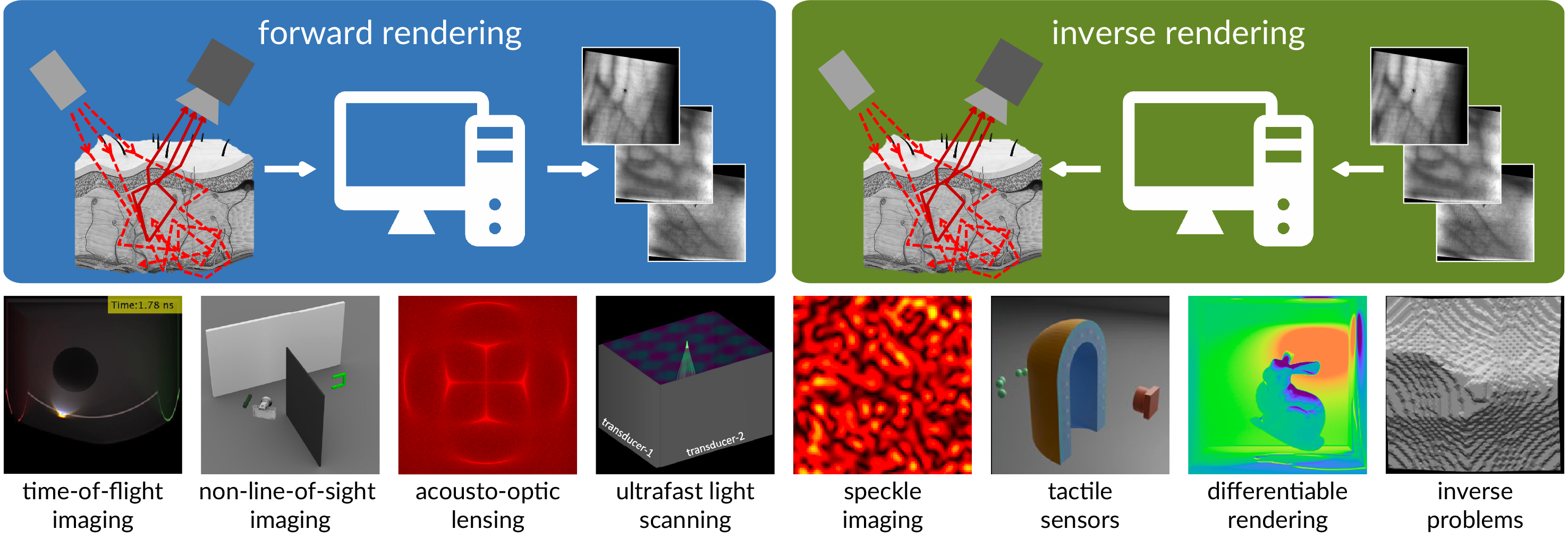

Physics-based rendering algorithms simulate photorealistic radiometric measurements captured by a variety of sensors, including conventional cameras, time-of-flight sensors, and lidar. They do so by computationally mimicking the flow of light through a mathematical representation of a virtual scene. This capability has made physics-based rendering a key ingredient in inferential pipelines for computational photography, computer vision, and computer graphics applications. For example, forward renderers can be used to simulate new camera systems or optimize the design of existing ones. They can also generate datasets for training and optimizing tailored post-processing algorithms jointly with the hardware, in an end-to-end fashion. Differentiable renderers can be used to backpropagate through image losses involving complex light transport effects. This makes it possible to solve previously intractable analysis-by-synthesis problems, and to incorporate physics-based simulation modules into probabilistic inference, deep learning, and generative pipelines.

The goal of this tutorial is to introduce physics-based rendering and to highlight relevant theory, algorithms, implementations, and current and future applications in computer vision and related areas. This material should help equip computer vision researchers and practitioners with the background needed to use state-of-the-art rendering tools in applications across vision, graphics, computational photography, and computational imaging.
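As a rough illustration of the analysis-by-synthesis idea mentioned above, the following toy sketch recovers a single scene parameter by differentiating an image loss through a forward renderer. Everything in it is an assumption made for illustration: the "renderer" is plain Lambertian shading of five pixels, the gradient is written out by hand, and the function names (`lambertian_shading`, `render`, `fit_albedo`) are hypothetical. It is not taken from the tutorial material or from any of the rendering tools it covers.

```python
import math


def lambertian_shading(normals, light_dir):
    """Clamped cosine between each surface normal and the light direction."""
    return [max(0.0, sum(n * l for n, l in zip(normal, light_dir)))
            for normal in normals]


def render(albedo, shading):
    """Toy forward renderer: one intensity per pixel (albedo times shading)."""
    return [albedo * s for s in shading]


def fit_albedo(target, shading, init=0.1, lr=0.5, steps=200):
    """Recover the albedo by gradient descent on a mean squared image loss."""
    albedo = init
    for _ in range(steps):
        image = render(albedo, shading)
        # d(loss)/d(albedo): the derivative of each pixel w.r.t. albedo is
        # its shading value, so the chain rule gives this sum.
        grad = sum(2.0 * (p - t) * s
                   for p, t, s in zip(image, target, shading)) / len(target)
        albedo -= lr * grad
    return albedo


# Five pixels looking at a curved surface lit from straight ahead.
angles = [-1.0, -0.5, 0.0, 0.5, 1.0]
normals = [(math.sin(a), 0.0, math.cos(a)) for a in angles]
shading = lambertian_shading(normals, (0.0, 0.0, 1.0))

target = render(0.8, shading)            # "measured" image, true albedo 0.8
estimate = fit_albedo(target, shading)   # start from a wrong guess of 0.1
print(round(estimate, 6))
```

A physics-based differentiable renderer plays the role of `render` and its hand-written derivative here, but for scenes with global illumination, scattering, and many unknown parameters.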

Agenda

Times are approximate. Slides for each tutorial section are available below.

| Time (PT) | Topic | Presenter |
|---|---|---|
| 1:30 - 1:35 pm | Welcome and introduction | |
| 1:35 - 1:45 pm | Primer on physics-based rendering | Ioannis Gkioulekas |
| 1:45 - 2:15 pm | Time-of-flight and non-line-of-sight imaging | Adithya Pediredla |
| 2:15 - 2:40 pm | Acousto-optics | Adithya Pediredla |
| 2:40 - 3:00 pm | Ultrafast lenses | Adithya Pediredla |
| 3:00 - 3:30 pm | Coffee break | |
| 3:30 - 3:50 pm | Speckle and fluorescence imaging | Ioannis Gkioulekas |
| 3:50 - 4:10 pm | Vision-based tactile sensors | Ioannis Gkioulekas |
| 4:10 - 4:30 pm | Differentiable rendering | Ioannis Gkioulekas |
| 4:30 - 4:40 pm | Inverse rendering problems | Ioannis Gkioulekas |
| 4:40 - 4:50 pm | Take-home messages | Adithya Pediredla |
| 4:50 - 5:00 pm | Wrap-up and Q&A | |

Slides

The slides for this tutorial are available here.

References

The following is a list of references to papers and software we highlight in each section of the tutorial. It is not an exhaustive, or even representative, bibliography for the corresponding research areas. For pointers to additional resources, we recommend perusing the bibliography of these references.

Background on physics-based rendering

- Physics-based Rendering

Ioannis Gkioulekas

course at Carnegie Mellon University

Time-of-flight rendering

- Ellipsoidal Path Connections for Time-gated Rendering [code]

Adithya Pediredla, Ashok Veeraraghavan, Ioannis Gkioulekas

ACM Transactions on Graphics (SIGGRAPH), 2019

Non-line-of-sight imaging

- SNLOS: Non-line-of-sight scanning through temporal focusing

Adithya Pediredla, Akshat Dave, Ashok Veeraraghavan

International Conference on Computational Photography (ICCP), 2019

Acousto-optics

- Path Tracing Estimators for Refractive Radiative Transfer [code]

Adithya Pediredla, Ashok Veeraraghavan, Ioannis Gkioulekas

ACM Transactions on Graphics (SIGGRAPH Asia), 2020

- Overcoming the Tradeoff Between Confinement and Focal Distance Using Virtual Ultrasonic Optical Waveguides

Matteo Giuseppe Scopelliti, Hengji Huang, Adithya Pediredla, Srinivasa Narasimhan, Ioannis Gkioulekas, Maysam Chamanzar

Optics Express, 2020

- Optimized Virtual Optical Waveguides Enhance Light Throughput in Scattering Media [code]

Adithya Pediredla, Ashok Veeraraghavan, Ioannis Gkioulekas

under review

Ultrafast lenses

- Megahertz Light Steering without Moving Parts

Adithya Pediredla, Maysam Chamanzar, Srinivasa Narasimhan, Ioannis Gkioulekas

IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2023

Speckle and fluorescence imaging

- A Monte Carlo Framework for Rendering Speckle Statistics in Scattering Media [code]

Chen Bar, Marina Alterman, Ioannis Gkioulekas, Anat Levin

ACM Transactions on Graphics (SIGGRAPH), 2019

- Rendering Near-Field Speckle Statistics in Scattering Media

Chen Bar, Ioannis Gkioulekas, Anat Levin

ACM Transactions on Graphics (SIGGRAPH Asia), 2020

- Single Scattering Modeling of Speckle Correlation

Chen Bar, Marina Alterman, Ioannis Gkioulekas, Anat Levin

International Conference on Computational Photography (ICCP), 2021

- Imaging with Local Speckle Intensity Correlations: Theory and Practice [code]

Marina Alterman, Chen Bar, Ioannis Gkioulekas, Anat Levin

ACM Transactions on Graphics, 2021

Vision-based tactile sensors

- Langevin Monte Carlo Rendering with Gradient-based Adaptation [code]

Fujun Luan, Shuang Zhao, Kavita Bala, Ioannis Gkioulekas

ACM Transactions on Graphics (SIGGRAPH), 2020

Differentiable rendering

- A Differential Theory of Radiative Transfer [code]

Cheng Zhang, Lifan Wu, Changxi Zheng, Ioannis Gkioulekas, Ravi Ramamoorthi, Shuang Zhao

ACM Transactions on Graphics (SIGGRAPH Asia), 2019

- Path-Space Differentiable Rendering [code]

Cheng Zhang, Bailey Miller, Kai Yan, Ioannis Gkioulekas, Shuang Zhao

ACM Transactions on Graphics (SIGGRAPH), 2020

Inverse rendering problems

- Inverse Volume Rendering with Material Dictionaries [code]

Ioannis Gkioulekas, Shuang Zhao, Kavita Bala, Todd Zickler, Anat Levin

ACM Transactions on Graphics (SIGGRAPH Asia), 2013

- An Evaluation of Computational Imaging Techniques for Heterogeneous Inverse Scattering

Ioannis Gkioulekas, Anat Levin, Todd Zickler

European Conference on Computer Vision (ECCV), 2016

- Beyond Volumetric Albedo—A Surface Optimization Framework for Non-Line-of-Sight Imaging [code]

Chia-Yin Tsai, Aswin C. Sankaranarayanan, Ioannis Gkioulekas

IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2019

- Neural Implicit Surface Reconstruction using Imaging Sonar [code]

Mohamad Qadri, Michael Kaess, Ioannis Gkioulekas

IEEE International Conference on Robotics and Automation (ICRA), 2023

- Neural Kaleidoscopic Space Sculpting [code]

Byeongjoo Ahn, Michael De Zeeuw, Ioannis Gkioulekas, Aswin C. Sankaranarayanan

IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2023

- Adjoint Nonlinear Ray Tracing [code]

Arjun Teh, Matthew O'Toole, Ioannis Gkioulekas

ACM Transactions on Graphics (SIGGRAPH), 2022

Monte Carlo PDE simulation

- Walk on Stars: A Grid-Free Monte Carlo Method for PDEs with Neumann Boundary Conditions

Rohan Sawhney, Bailey Miller, Ioannis Gkioulekas, Keenan Crane

ACM Transactions on Graphics (SIGGRAPH), 2023

- Boundary Value Caching for Walk on Spheres

Bailey Miller, Rohan Sawhney, Keenan Crane, Ioannis Gkioulekas

ACM Transactions on Graphics (SIGGRAPH), 2023

Sponsors

This tutorial was supported by NSF awards 1730147, 1900849, 2008123, a Sloan Research Fellowship for Ioannis Gkioulekas, and a Burke Award for Adithya Pediredla. We are also grateful to the sponsors of the individual research projects presented in this tutorial (NSF, ONR, DARPA, Amazon Web Services).